HashiCorp Vault on Production-ready Kubernetes: Complete Architecture Guide

10 September 2025

Kilian Niemegeerts

You’re running Kubernetes in production. Secrets are everywhere – database passwords, API keys, certificates. You can’t commit them to Git, but your GitOps pipeline needs them. Meanwhile, your HashiCorp Vault instance goes down because it’s fighting for resources with your application pods during traffic spikes.

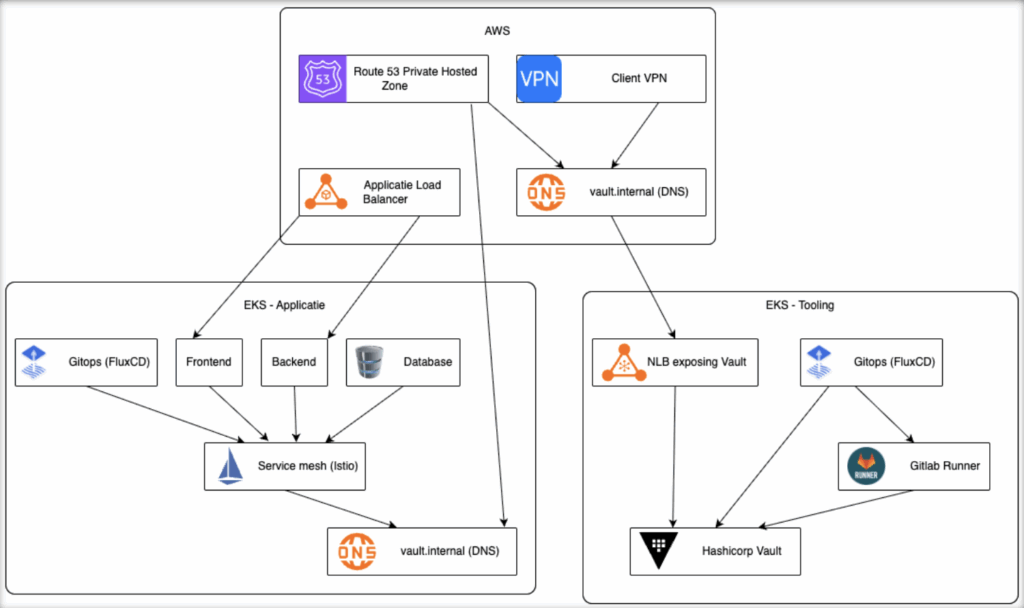

We built a multi-cluster Kubernetes architecture on AWS that solves these problems. Critical infrastructure runs in its own cluster, secrets stay out of Git, and everything deploys automatically.

This is part 1 of our 6-part series documenting the implementation.

- Production Kubernetes Architecture with HashiCorp Vault

- Terraform Infrastructure for HashiCorp Vault on EKS

- External Secrets Operator: GitOps for Kubernetes Secrets

- Dynamic PostgreSQL Credentials with HashiCorp Vault

- Vault Agent vs Secrets Operator vs CSI Provider

- Securing Vault Access with Internal NLB and VPN

HashiCorp Vault Multi-Cluster Kubernetes Architecture

Our production-ready Vault infrastructure on Kubernetes splits into two EKS clusters:

- Application Cluster: Frontend, backend, databases, Istio service mesh

- Tooling Cluster: HashiCorp Vault, GitLab Runner, External Secrets Operator, FluxCD

Why split them? Because mixing critical infrastructure with applications creates problems.

Why Separate Clusters for HashiCorp Vault

Different Scaling Needs

Applications scale by adding or removing pods based on traffic. Vault doesn’t. It needs consistent resources and high availability. Technically, Kubernetes gives you tools to isolate workloads within a single cluster, using node pools, resource limits, taints, and affinity rules. So yes, you can avoid resource competition in a shared cluster.

But in practice, it’s simply easier to manage scaling separately. Separate clusters mean a simpler setup and less room for mistakes when the two workloads behave so differently.

Update Cycles Don’t Match

Application deployments happen continuously. Vault upgrades need planning and testing. In a shared cluster you can still upgrade Vault separately from your apps, but not the cluster itself: a Kubernetes version upgrade affects everything running in it. Separate clusters let us upgrade the tooling cluster, Kubernetes version included, independently. For example, we can upgrade the Vault cluster on Tuesday without blocking Thursday’s feature release in the application cluster.

Clear Security Boundaries

With one cluster, RBAC policies become complex: separating developers from infrastructure tools is hard. Two clusters mean separate IAM roles and separate policies, which keeps the security model straightforward.

Infrastructure Stays Up

If an application goes down, Vault shouldn’t go with it. In a shared cluster, both share the same fate when the cluster itself has issues. With separate clusters, a failure in the application cluster doesn’t take our secrets management down with it.

Terraform Infrastructure for Vault on Kubernetes

Manual infrastructure creates drift between environments in production Kubernetes. You deploy to dev, and it works. You deploy to production three weeks later: different configs, different versions, different results. We need everything automated, versioned, and repeatable. That’s where Terraform comes in.

Our Terraform modules cover 80% of the most common Vault use cases without making things overly complex.

```
modules/
├── vpc/   # Networks per cluster
├── iam/   # Roles and policies
├── kms/   # Vault auto-unseal keys
├── efs/   # Vault storage
└── eks/   # Cluster configs
```

Each cluster gets its own configuration that uses these modules with different parameters.
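To make "same modules, different parameters" concrete, here is a minimal Python sketch (a model of the idea, not Terraform) that treats each cluster's configuration as shared defaults plus per-cluster overrides, then diffs the two clusters. Every key and value below, such as `kubernetes_version` or `node_instance_type`, is a hypothetical placeholder rather than an actual input of our modules.

```python
from typing import Any

# Hypothetical defaults shared by every cluster (stand-ins for module inputs).
SHARED_DEFAULTS: dict[str, Any] = {
    "kubernetes_version": "1.30",
    "node_instance_type": "m5.large",
    "min_nodes": 2,
    "enable_vault": False,
}

# Hypothetical per-cluster overrides: only the intended differences live here.
OVERRIDES: dict[str, dict[str, Any]] = {
    "application": {"min_nodes": 3},
    "tooling": {"enable_vault": True, "node_instance_type": "m5.xlarge"},
}


def effective_config(cluster: str) -> dict[str, Any]:
    """Merge the shared defaults with one cluster's overrides (overrides win)."""
    return {**SHARED_DEFAULTS, **OVERRIDES[cluster]}


def diff(a: dict[str, Any], b: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return {key: (value_in_a, value_in_b)} for every key whose values differ."""
    keys = sorted(a.keys() | b.keys())
    return {k: (a.get(k), b.get(k)) for k in keys if a.get(k) != b.get(k)}


if __name__ == "__main__":
    app, tooling = effective_config("application"), effective_config("tooling")
    for key, (app_value, tooling_value) in diff(app, tooling).items():
        print(f"{key}: application={app_value} tooling={tooling_value}")
```

Because every intended difference is declared in one place, the diff lists only those keys; anything else showing up between environments would be drift.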

Secret Management Patterns in Kubernetes

Kubernetes secrets have a fundamental problem: GitOps means everything lives in Git, but secrets in Git are a security risk. HashiCorp Vault solves this, but raises a new question: how do pods get their secrets from Vault?

We use different patterns for different needs:

Static Secrets: External Secrets Operator

API keys and configuration values that rarely change go through the External Secrets Operator (ESO). It syncs them from Vault into Kubernetes Secrets, so the values themselves are never stored in Git.
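The end product of an ESO sync is an ordinary Kubernetes Secret. As a rough sketch of that mapping (the real work happens inside ESO's controller), the Python below turns key/value pairs read from Vault into a `v1` Secret manifest. The Vault read is stubbed with a plain dict, and the secret name, namespace, and keys are hypothetical.

```python
import base64
import json
from typing import Any


def to_k8s_secret(name: str, namespace: str, vault_data: dict[str, str]) -> dict[str, Any]:
    """Build a v1 Secret manifest from key/value pairs read from Vault.

    Kubernetes expects Secret `data` values to be base64-encoded,
    so each value is encoded here before it goes into the manifest.
    """
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": name, "namespace": namespace},
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in vault_data.items()
        },
    }


if __name__ == "__main__":
    # Stand-in for a Vault KV read; the keys and values are hypothetical.
    fetched = {"api_key": "example-key", "log_level": "info"}
    print(json.dumps(to_k8s_secret("backend-config", "app", fetched), indent=2))
```

Note that base64 in `data` is an encoding, not encryption: anyone who can read the Secret object can decode it, so RBAC on Secrets still matters.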

Dynamic Credentials: Database Engine

Vault's database secrets engine creates PostgreSQL users on demand with 1-hour lifetimes, using a creation statement like this:

```sql
CREATE ROLE "{{name}}" WITH LOGIN PASSWORD '{{password}}' VALID UNTIL '{{expiration}}';
```

There are no shared passwords and no rotation tickets.
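To show what happens to that template on each request, here is a simplified Python imitation of the placeholder substitution. The username scheme, the 24-character password, and the timestamp format are assumptions for illustration; Vault generates its own names and values internally.

```python
import secrets
import string
from datetime import datetime, timedelta, timezone
from typing import Optional

# Same template as above; in production, Vault fills in the {{...}} placeholders itself.
CREATION_STATEMENT = (
    "CREATE ROLE \"{{name}}\" WITH LOGIN PASSWORD '{{password}}' "
    "VALID UNTIL '{{expiration}}';"
)

# Letters and digits only, so a generated password can never close the SQL quotes.
ALPHABET = string.ascii_letters + string.digits


def issue_credentials(role: str, ttl: timedelta = timedelta(hours=1),
                      now: Optional[datetime] = None) -> dict:
    """Simulate issuing one short-lived PostgreSQL login for a Vault role.

    `now` should be timezone-aware; it defaults to the current UTC time.
    """
    now = now or datetime.now(timezone.utc)
    expires = now + ttl
    # Hypothetical naming scheme: "v-<role>-<8 random hex chars>".
    username = f"v-{role}-{secrets.token_hex(4)}"
    password = "".join(secrets.choice(ALPHABET) for _ in range(24))
    sql = (
        CREATION_STATEMENT
        .replace("{{name}}", username)
        .replace("{{password}}", password)
        # e.g. "2025-09-10 13:00:00+00:00", a timestamp format PostgreSQL accepts.
        .replace("{{expiration}}", expires.isoformat(sep=" ", timespec="seconds"))
    )
    return {"username": username, "password": password, "expires": expires, "sql": sql}


if __name__ == "__main__":
    creds = issue_credentials("readonly")
    print(creds["sql"])
```

PostgreSQL stops accepting the password after the `VALID UNTIL` timestamp, and when the lease ends Vault also revokes the user, so a leaked credential has a short shelf life.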

Vault Agent vs Secrets Operator vs CSI Provider

When you have HashiCorp Vault running, you still need to get those secrets into your applications. HashiCorp offers three approaches, each with trade-offs:

Vault Agent Injector: Adds a sidecar container that authenticates with Vault and writes secrets to a shared volume. Perfect for dynamic secrets that need automatic renewal.

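Vault Secrets Operator: Syncs Vault data into native Kubernetes Secrets. Great for GitOps. It can also sync dynamic credentials through its VaultDynamicSecret resource, but rotated values land in a Kubernetes Secret, and applications must re-read that Secret or be restarted to pick them up.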

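CSI Provider: Mounts secrets as files through the Secrets Store CSI Driver during pod startup, with no sidecar in the pod. Flexible, and it avoids creating Kubernetes Secrets unless you opt into syncing them.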

We chose Vault Agent for dynamic database credentials because it handles lease renewal automatically – critical when credentials expire every hour.
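The renewal argument is easier to reason about with numbers. The Python model below computes when a client would renew a 1-hour lease. Two parts are our assumptions, not Vault Agent's exact algorithm: renewing once two-thirds of the remaining lifetime has elapsed, and the shape of the `Lease` class. The behavior it illustrates holds in general, though: renewals can only stretch a lease up to its max TTL, after which fresh credentials must be requested.

```python
from dataclasses import dataclass


@dataclass
class Lease:
    ttl_s: int      # lifetime granted at issue and on each renewal (3600 = 1 hour)
    max_ttl_s: int  # hard cap: renewals can never push expiry past this point


def renewal_schedule(lease: Lease, fraction: float = 2 / 3) -> tuple[list[float], float]:
    """Return (renewal times, final expiry) in seconds since the credential was issued.

    Assumption: the client renews once `fraction` of the remaining lifetime has
    elapsed. Each renewal extends expiry by ttl_s, capped at max_ttl_s; once the
    cap is reached, renewing no longer helps and a new credential is needed.
    """
    if lease.ttl_s <= 0 or not 0 < fraction < 1:
        raise ValueError("ttl_s must be positive and fraction must be in (0, 1)")
    renewals: list[float] = []
    now = 0.0
    expires_at = float(min(lease.ttl_s, lease.max_ttl_s))
    while expires_at < lease.max_ttl_s:
        now += (expires_at - now) * fraction  # wait until the renewal point
        renewals.append(now)
        expires_at = min(now + lease.ttl_s, float(lease.max_ttl_s))
    return renewals, expires_at


if __name__ == "__main__":
    times, final = renewal_schedule(Lease(ttl_s=3600, max_ttl_s=3 * 3600))
    print("renew at (min):", [round(t / 60) for t in times])
    print("must be replaced by (min):", round(final / 60))
```

With a 1-hour TTL and a 3-hour max TTL, the model renews three times and then hits the cap. At that point Vault Agent has to fetch a new credential and re-render its template, which is exactly the chore we did not want each application to implement.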

Kubernetes Production Security with Vault

With secrets managed by Vault and infrastructure automated, we still needed to secure the runtime. Two critical questions:

- How do services communicate securely within the cluster?

- How do engineers access Vault without exposing it publicly?

Istio Service Mesh for Vault Security

By default, Kubernetes lets any pod talk to any other pod. That’s a security nightmare. We implemented an Istio service mesh in which every connection must be:

- Encrypted (automatic mTLS)

- Authenticated (service identity)

- Authorized (explicit policies)

For example, in our setup only the assets service is allowed to talk to ActiveMQ:

```yaml
apiVersion: security.istio.io/v1
kind: AuthorizationPolicy
metadata:
  name: allow-assets-to-activemq
spec:
  selector:
    matchLabels:
      app: activemq
  action: ALLOW
  rules:
  - from:
    - source:
        principals: ["cluster.local/ns/app/sa/assets-sa"]
```
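To make the ALLOW semantics explicit, here is a deliberately pared-down Python model of how such a policy is evaluated. It captures Istio's documented rule (if any ALLOW policy selects a workload, requests matching none of them are denied) but ignores DENY and CUSTOM actions, namespaces, wildcard principals, and `to`/`when` clauses. The `AllowPolicy` class and the `frontend-sa` principal are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AllowPolicy:
    """A pared-down ALLOW AuthorizationPolicy: a workload selector plus allowed principals."""
    name: str
    match_labels: dict[str, str]
    principals: list[str] = field(default_factory=list)

    def selects(self, workload_labels: dict[str, str]) -> bool:
        # matchLabels semantics: every selector label must be present with the same value.
        return all(workload_labels.get(k) == v for k, v in self.match_labels.items())


def is_allowed(policies: list[AllowPolicy], workload_labels: dict[str, str],
               source_principal: str) -> bool:
    """Decide a request with Istio's ALLOW-policy rule (simplified, exact matches only).

    No ALLOW policy selects the target workload -> allow.
    At least one does -> allow only if the caller's principal matches one of them.
    """
    applicable = [p for p in policies if p.selects(workload_labels)]
    if not applicable:
        return True
    return any(source_principal in p.principals for p in applicable)


if __name__ == "__main__":
    policy = AllowPolicy(
        name="allow-assets-to-activemq",
        match_labels={"app": "activemq"},
        principals=["cluster.local/ns/app/sa/assets-sa"],
    )
    activemq = {"app": "activemq"}
    print(is_allowed([policy], activemq, "cluster.local/ns/app/sa/assets-sa"))    # True
    print(is_allowed([policy], activemq, "cluster.local/ns/app/sa/frontend-sa"))  # False
```

Two consequences follow: once one ALLOW policy selects ActiveMQ, every other caller (including plaintext traffic with no mTLS identity, which has no principal to match) is rejected, while workloads that no policy selects stay open until they get a policy of their own.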

Securing Vault Access in Production

Vault holds all of our secrets, so it must never be publicly accessible. Our solution:

- Internal Load Balancer: No public IP exists

- Private DNS: Clean URL via vault.tooling.internal

- VPN Access: Engineers connect through VPN when needed

This means Vault is invisible to the internet but accessible where needed.
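A quick way to verify the "no public IP" property from an engineer's machine is to resolve the private hostname and confirm that every address falls in a private range. The Python sketch below uses only the standard library; the expectation that `vault.tooling.internal` resolves only while connected to the VPN is our assumption about how the private DNS zone is scoped.

```python
import ipaddress
import socket


def resolve(hostname: str) -> list[str]:
    """Return the unique IP addresses a hostname resolves to (requires working DNS)."""
    infos = socket.getaddrinfo(hostname, None)
    return sorted({info[4][0] for info in infos})


def public_addresses(addresses: list[str]) -> list[str]:
    """Return every address that is NOT in a private range; an empty list means all private."""
    return [a for a in addresses if not ipaddress.ip_address(a).is_private]


if __name__ == "__main__":
    try:
        found = resolve("vault.tooling.internal")
    except socket.gaierror:
        # Expected when not on the VPN: the private zone is not visible from outside.
        print("vault.tooling.internal does not resolve from here")
    else:
        leaked = public_addresses(found)
        print("public addresses:", leaked or "none")
```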

GitOps Deployment with FluxCD

Infrastructure is automated with Terraform. But how do applications deploy to our Kubernetes clusters? That’s where GitOps comes in.

FluxCD watches our Git repositories and automatically deploys changes to both clusters. Update a container image? Commit to Git. Change a configuration? Commit to Git. No manual kubectl commands, no confusion about what’s deployed where.
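At its core, GitOps is a reconciliation loop: compare what Git declares with what the cluster runs, then converge. The Python sketch below computes that plan as create, update, and delete actions. It is a simplified model, not FluxCD's implementation: Flux applies manifests server-side and, when `prune` is enabled on a Kustomization, only deletes objects it applied itself, whereas this sketch treats every live object as Flux-managed. The object keys and contents are hypothetical.

```python
from typing import Any

# Objects keyed by "Kind/namespace/name"; values are their (simplified) specs.
Manifests = dict[str, dict[str, Any]]


def plan(desired: Manifests, live: Manifests, prune: bool = True) -> list[tuple[str, str]]:
    """Compute the actions that make `live` (the cluster) match `desired` (Git)."""
    actions: list[tuple[str, str]] = []
    for key in sorted(desired):
        if key not in live:
            actions.append(("create", key))
        elif live[key] != desired[key]:
            actions.append(("update", key))
    if prune:
        # Objects that disappeared from Git are removed from the cluster.
        actions.extend(("delete", key) for key in sorted(live) if key not in desired)
    return actions


if __name__ == "__main__":
    git = {
        "Deployment/app/backend": {"image": "registry.example.com/backend:1.4.0"},
        "ConfigMap/app/backend-config": {"LOG_LEVEL": "info"},
    }
    cluster = {
        "Deployment/app/backend": {"image": "registry.example.com/backend:1.3.2"},
        "Service/app/legacy": {"port": 8080},
    }
    for action, key in plan(git, cluster):
        print(action, key)
```

Running the same plan twice in a row is the point: once the cluster matches Git, the plan is empty, so the loop is safe to repeat on every commit.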

GitLab Runner with Podman in Kubernetes

Container builds need special attention in production-ready Kubernetes. Running Docker inside a pod (Docker-in-Docker) requires privileged mode, which is a security risk. We chose Podman for rootless builds, deployed via GitLab Runner in the tooling cluster. Even the runner registration token comes from Vault.

Getting Started with HashiCorp Vault on Kubernetes

All code from this production architecture is available in our GitHub repository:

- Complete Terraform modules

- Working Vault configurations

- Bootstrap scripts and fixes

- GitOps manifests

Need help implementing HashiCorp Vault in your Kubernetes environment? Our team has hands-on experience with production deployments. Contact FlowFactor to discuss your infrastructure challenges.
