Setting up OpenShift prerequisites

15 December 2020

Niel De Boever

In this article, I will go over how to set up the OpenShift hardware and prerequisites before installing the container platform. For this tutorial, I will be using VMware vSphere as the infrastructure provider, but there shouldn’t be much of a difference when using other hardware platforms.

We will set up the following:

- DNS server

- DHCP server

- HAProxy as API/ingress load balancer

Setting up the hardware

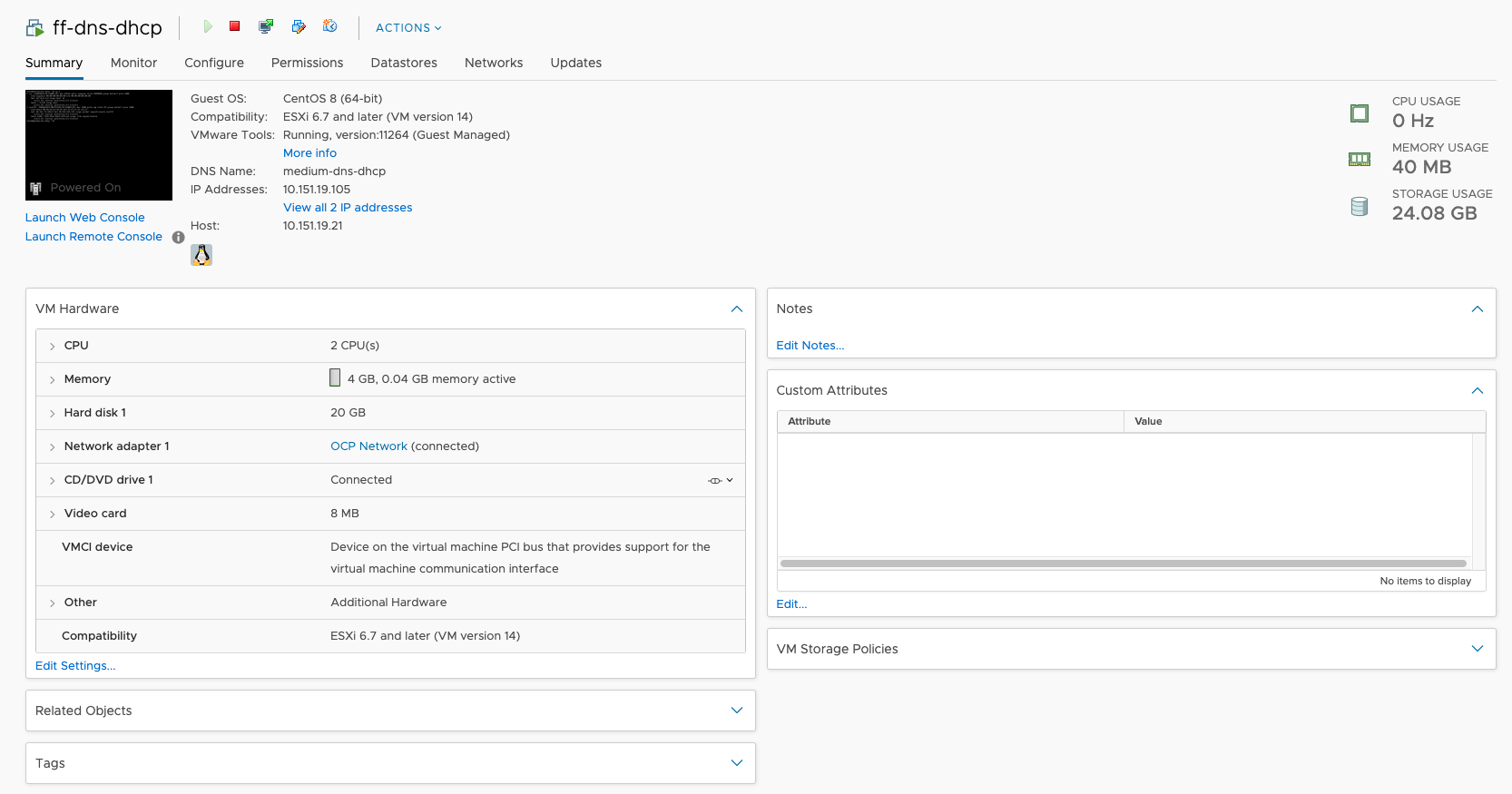

To set up the servers, I will create 2 virtual machines on vSphere with the following specs: 2 CPUs, 4 GB RAM and 20 GB of storage. Both machines will be running CentOS 8. One will host the DNS/DHCP server and the other will host the load balancer.

When the installation has finished, we can log into the machine with the user accounts that were created during the installation. Before we can do anything, we have to give a static IP to the server to access the internet so we can move along to the following steps.

Edit the network interface:

    vim /etc/sysconfig/network-scripts/ifcfg-ens192

    TYPE=Ethernet
    PROXY_METHOD=none
    BROWSER_ONLY=no
    BOOTPROTO=none
    IPADDR=10.151.19.105
    NETMASK=255.255.255.0
    GATEWAY=10.151.19.254
    DEFROUTE=yes
    IPV4_FAILURE_FATAL=no
    IPV6INIT=yes
    IPV6_AUTOCONF=yes
    IPV6_DEFROUTE=yes
    IPV6_FAILURE_FATAL=no
    IPV6_ADDR_GEN_MODE=stable-privacy
    NAME=ens192
    UUID=729fad1a-f943-4467-941c-3befee0158b1
    DEVICE=ens192
    ONBOOT=yes

Set the boot protocol to none, assign a static IP address and netmask, configure the gateway, and set ONBOOT to yes so the IP gets assigned automatically when the machine reboots.
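A static configuration only works if the gateway sits in the same subnet as the address you assign. As a quick sanity check, here is a minimal sketch in plain POSIX shell. The helper names `ip_to_int` and `same_subnet` are my own and not part of any tool; the addresses are the ones from the file above.

```shell
#!/bin/sh
# Hypothetical helpers, not part of CentOS or NetworkManager.

# Convert a dotted-quad IPv4 address to a single integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the address into its four octets
    IFS=$old_ifs
    echo $(( $1 * 16777216 + $2 * 65536 + $3 * 256 + $4 ))
}

# Succeed when two addresses share a network under the given netmask.
# Arguments: address, gateway, netmask.
same_subnet() {
    addr=$(ip_to_int "$1")
    gw=$(ip_to_int "$2")
    mask=$(ip_to_int "$3")
    [ $(( addr & mask )) -eq $(( gw & mask )) ]
}

if same_subnet 10.151.19.105 10.151.19.254 255.255.255.0; then
    echo "gateway is on the local subnet"
else
    echo "gateway is NOT on the local subnet"
fi
```

If the check fails, the interface will come up but the default route will not be usable.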

Now restart the network interface:

    ifdown ens192
    ifup ens192

And we should be able to access the internet.

    [root@ff-dns-dhcp ~]# ping 8.8.8.8
    PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.
    64 bytes from 8.8.8.8: icmp_seq=1 ttl=113 time=5.83 ms
    64 bytes from 8.8.8.8: icmp_seq=2 ttl=113 time=6.03 ms

Note: assigning a static IP address is only necessary for the first server that will act as a DHCP server. When setting up other servers after the DHCP is configured, the boot protocol of that server can be set to DHCP and it will automatically receive an IP address.

Configuring the DHCP server

Install the dhcp server package:

yum -y install dhcp-server

Edit the dhcp config at /etc/dhcp/dhcpd.conf

sudo vi /etc/dhcp/dhcpd.conf

Here I will configure a DHCP range from 10.151.19.100 to 10.151.19.200

This means that each machine that uses DHCP as boot protocol will get an IP within this range.

I’ve also reserved a fixed IP address for each OCP machine based on its MAC address. This way we already know which IPs the machines will get, so we can configure the DNS server in later steps.

    option domain-name "flowfactor.internal";
    option domain-name-servers 10.151.19.105, 10.0.10.6, 10.0.13.44;

    default-lease-time 600;
    max-lease-time 7200;

    authoritative;

    log-facility local7;

    subnet 10.151.19.0 netmask 255.255.255.0 {
        range 10.151.19.100 10.151.19.200;
        option domain-name "flowfactor.internal";
        option domain-name-servers 10.151.19.105;
        option routers 10.151.19.254;
        option broadcast-address 10.151.19.255;
        default-lease-time 600;
        max-lease-time 7200;
    }

    host ocp1-bootstrap {
        option host-name "ff-bootstrap-ocp1.flowfactor.internal";
        hardware ethernet 00:50:56:b4:16:59;
        fixed-address 10.151.19.209;
    }

    host ocp1-master1 {
        option host-name "ff-master1-ocp1.flowfactor.internal";
        hardware ethernet 00:50:56:b4:16:60;
        fixed-address 10.151.19.201;
    }

    host ocp1-master2 {
        option host-name "ff-master2-ocp1.flowfactor.internal";
        hardware ethernet 00:50:56:b4:16:61;
        fixed-address 10.151.19.202;
    }

    host ocp1-master3 {
        option host-name "ff-master3-ocp1.flowfactor.internal";
        hardware ethernet 00:50:56:b4:16:62;
        fixed-address 10.151.19.203;
    }

    host ocp1-worker1 {
        option host-name "ff-worker1-ocp1.flowfactor.internal";
        hardware ethernet 00:50:56:b4:16:63;
        fixed-address 10.151.19.204;
    }

    host ocp1-worker2 {
        option host-name "ff-worker2-ocp1.flowfactor.internal";
        hardware ethernet 00:50:56:b4:16:64;
        fixed-address 10.151.19.205;
    }

    host ocp1-worker3 {
        option host-name "ff-worker3-ocp1.flowfactor.internal";
        hardware ethernet 00:50:56:b4:16:65;
        fixed-address 10.151.19.206;
    }

    host ocp1-worker4 {
        option host-name "ff-worker4-ocp1.flowfactor.internal";
        hardware ethernet 00:50:56:b4:16:66;
        fixed-address 10.151.19.207;
    }

    host ocp1-worker5 {
        option host-name "ff-worker5-ocp1.flowfactor.internal";
        hardware ethernet 00:50:56:b4:16:67;
        fixed-address 10.151.19.208;
    }
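The host entries above all follow the same pattern, so they can be generated instead of typed by hand. The following is only a sketch: `dhcp_host_entry` is a helper name I made up, and the two rows fed into it are copied from the config above. Paste the output into /etc/dhcp/dhcpd.conf yourself.

```shell
#!/bin/sh
# Hypothetical helper: print one dhcpd.conf host block.
# Arguments: short name, fully qualified host name, MAC address, fixed IP.
dhcp_host_entry() {
    printf 'host %s {\n' "$1"
    printf '    option host-name "%s";\n' "$2"
    printf '    hardware ethernet %s;\n' "$3"
    printf '    fixed-address %s;\n' "$4"
    printf '}\n'
}

# Read "name fqdn mac ip" rows and emit a block for each one.
while read -r name fqdn mac ip; do
    dhcp_host_entry "$name" "$fqdn" "$mac" "$ip"
done <<'EOF'
ocp1-master1 ff-master1-ocp1.flowfactor.internal 00:50:56:b4:16:60 10.151.19.201
ocp1-master2 ff-master2-ocp1.flowfactor.internal 00:50:56:b4:16:61 10.151.19.202
EOF
```

Keeping the machine list in one place also makes it easier to keep the DHCP reservations and the DNS records in sync later on.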

Now we only need to start and enable the DHCP service and allow it through the firewall.

    sudo systemctl start dhcpd
    sudo systemctl enable dhcpd
    sudo firewall-cmd --add-service=dhcp --permanent
    sudo firewall-cmd --reload

Now, let’s set up the BIND DNS server.

Install the bind packages:

yum -y install bind bind-utils

Edit the bind config at /etc/named.conf

vim /etc/named.conf

To listen on all IP addresses, comment out the following lines:

    # listen-on port 53 { 127.0.0.1; };
    # listen-on-v6 port 53 { ::1; };

Allow queries from your network, or set it to any to allow all queries:

    allow-query { localhost; 10.151.19.0/24; };
    # OR
    allow-query { localhost; any; };

Creating forward and reverse DNS zones:

To create these DNS zones, add the following at the bottom of /etc/named.conf

    # Forward zone
    zone "flowfactor.internal" IN {
        type master;
        file "fwd.flowfactor.internal.db";
        allow-update { none; };
    };

    # Reverse zone
    zone "19.151.10.in-addr.arpa" IN {
        type master;
        file "rev.19.151.10.db";
        allow-update { none; };
    };

Change the forward DNS domain name to your own base domain. For the reverse zone, take the first three octets of your network address, reverse their order, and append .in-addr.arpa. For the 10.151.19.0/24 network used here, that gives 19.151.10.in-addr.arpa.
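If you want to derive the reverse zone name rather than work it out by hand, a small shell function will do. This is a sketch that assumes a /24 network like the one in this tutorial; `reverse_zone` is a name I picked for illustration.

```shell
#!/bin/sh
# Hypothetical helper: print the reverse zone name for any address
# inside a /24 network (the first three octets, in reverse order).
reverse_zone() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the address into its four octets
    IFS=$old_ifs
    echo "$3.$2.$1.in-addr.arpa"
}

reverse_zone 10.151.19.105   # prints 19.151.10.in-addr.arpa
```

For networks that are not split on an octet boundary, the zone name is built differently, so treat this as a shortcut for the common case only.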

Creating DNS zone files

To define DNS records, we first have to create a zone file for both the forward and the reverse zone under the /var/named/ directory. Let’s start by creating the forward zone file:

vim /var/named/fwd.flowfactor.internal.db

In this file, we have to create a DNS A record for every OCP machine in the network, as well as for our current server and load balancer.

OpenShift also requires some special records:

- Two A records that point to the load balancer, one for the internal and one for the external API.

- One wildcard DNS record that also points to the load balancer. This is used to create URLs for applications in OpenShift.

- etcd A records that point to the corresponding master node.

- _etcd SRV records that point to the etcd A records on port 2380. These will be used by the nodes to discover each other and determine the health of the cluster.

    $TTL 86400
    @ IN SOA ff-dns-dhcp.flowfactor.internal. root.flowfactor.internal. (
            1       ; Serial
            604800  ; Refresh
            86400   ; Retry
            2419200 ; Expire
            86400   ; Negative Cache TTL
    );
    @ IN NS ff-dns-dhcp.flowfactor.internal.

    $ORIGIN flowfactor.internal.
    ff-dns-dhcp          IN A 10.151.19.105

    ff-bastion-ocp1      IN A 10.151.19.133
    ff-loadbalancer-ocp1 IN A 10.151.19.177

    ff-bootstrap-ocp1    IN A 10.151.19.209
    ff-master1-ocp1      IN A 10.151.19.201
    ff-master2-ocp1      IN A 10.151.19.202
    ff-master3-ocp1      IN A 10.151.19.203

    ff-worker1-ocp1      IN A 10.151.19.204
    ff-worker2-ocp1      IN A 10.151.19.205
    ff-worker3-ocp1      IN A 10.151.19.206
    ff-worker4-ocp1      IN A 10.151.19.207
    ff-worker5-ocp1      IN A 10.151.19.208

    $ORIGIN ocp1.flowfactor.internal.
    etcd-0 IN A 10.151.19.201
    etcd-1 IN A 10.151.19.202
    etcd-2 IN A 10.151.19.203

    _etcd-server-ssl._tcp IN SRV 0 10 2380 etcd-0
    _etcd-server-ssl._tcp IN SRV 0 10 2380 etcd-1
    _etcd-server-ssl._tcp IN SRV 0 10 2380 etcd-2

    api     IN A 10.151.19.177
    api-int IN A 10.151.19.177

    $ORIGIN apps.ocp1.flowfactor.internal.
    * IN A 10.151.19.177

Secondly, we have to create the reverse lookup file:

vim /var/named/rev.19.151.10.db

The reverse records are important because they determine the hostname of the CoreOS OCP machines. During the installation, each machine does a reverse lookup of its own IP address and sets its hostname accordingly.

    $TTL 86400
    @ IN SOA ff-dns-dhcp.flowfactor.internal. root.flowfactor.internal. (
            1       ; Serial
            604800  ; Refresh
            86400   ; Retry
            2419200 ; Expire
            86400   ; Negative Cache TTL
    );
    @ IN NS ff-dns-dhcp.flowfactor.internal.

    105 IN PTR ff-dns-dhcp.flowfactor.internal.

    133 IN PTR ff-bastion-ocp1.flowfactor.internal.
    177 IN PTR ff-loadbalancer-ocp1.flowfactor.internal.

    209 IN PTR ff-bootstrap-ocp1.flowfactor.internal.
    201 IN PTR ff-master1-ocp1.flowfactor.internal.
    202 IN PTR ff-master2-ocp1.flowfactor.internal.
    203 IN PTR ff-master3-ocp1.flowfactor.internal.

    201 IN PTR etcd-0.ocp1.flowfactor.internal.
    202 IN PTR etcd-1.ocp1.flowfactor.internal.
    203 IN PTR etcd-2.ocp1.flowfactor.internal.

    204 IN PTR ff-worker1-ocp1.flowfactor.internal.
    205 IN PTR ff-worker2-ocp1.flowfactor.internal.
    206 IN PTR ff-worker3-ocp1.flowfactor.internal.
    207 IN PTR ff-worker4-ocp1.flowfactor.internal.
    208 IN PTR ff-worker5-ocp1.flowfactor.internal.
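Each PTR line in the reverse zone is just the last octet of the IP address followed by the fully qualified hostname with a trailing dot. A minimal sketch of that mapping, again assuming a /24 network; `ptr_record` is a helper name of my own.

```shell
#!/bin/sh
# Hypothetical helper: print the PTR line for one machine in a /24
# reverse zone. Arguments: IP address, fully qualified host name.
ptr_record() {
    # ${1##*.} strips everything up to the last dot, leaving the last octet.
    printf '%s IN PTR %s.\n' "${1##*.}" "$2"
}

ptr_record 10.151.19.201 ff-master1-ocp1.flowfactor.internal
ptr_record 10.151.19.204 ff-worker1-ocp1.flowfactor.internal
```

The trailing dot matters: without it, BIND appends the zone origin to the name.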

Service and firewall

The DNS server should be ready now. The only thing left to do is to restart and enable the service and allow DNS through the firewall.

    systemctl restart named
    systemctl enable named

Add the DNS service and reload the firewall:

    firewall-cmd --permanent --zone=public --add-service=dns
    firewall-cmd --reload
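With named running, it is worth checking that the special OpenShift records actually resolve before moving on. The sketch below lists the names for a given cluster and queries them with dig, which comes with the bind-utils package installed earlier. The function names `required_names` and `check_names` are hypothetical, and `test.apps` is just an arbitrary label to exercise the wildcard record.

```shell
#!/bin/sh
# Hypothetical helper: print the DNS names described in this article
# for one cluster. Arguments: cluster name, base domain.
required_names() {
    cluster=$1
    base=$2
    echo "api.$cluster.$base"
    echo "api-int.$cluster.$base"
    echo "test.apps.$cluster.$base"   # any label should match the wildcard
    for i in 0 1 2; do
        echo "etcd-$i.$cluster.$base"
    done
}

# Query every name against a DNS server and print the answer.
# Arguments: DNS server IP, cluster name, base domain. Requires dig.
check_names() {
    server=$1
    shift
    required_names "$@" | while read -r name; do
        answer=$(dig +short "@$server" "$name")
        echo "$name -> ${answer:-NO ANSWER}"
    done
}

# Example (run on a machine that can reach the DNS server):
#   check_names 10.151.19.105 ocp1 flowfactor.internal
```

Any line that ends in NO ANSWER points to a missing or mistyped record in the forward zone file.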

Configuring the load balancer

Install the HAProxy package:

yum -y install haproxy

Edit the HAProxy config at /etc/haproxy/haproxy.cfg

vim /etc/haproxy/haproxy.cfg

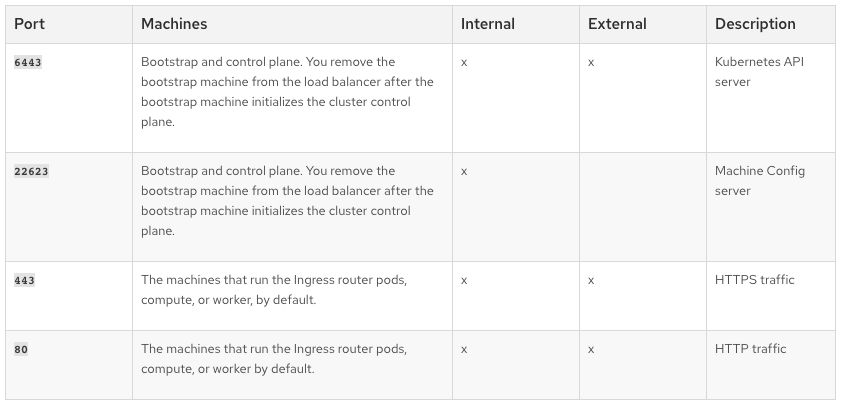

Here we will configure 4 load balancers, one for each port that OpenShift needs.

OpenShift load balancer ports

Port 6443 is used for API calls, both internal and external, and port 22623 is used to serve machine configs to the nodes. Ports 443 and 80 are the secure and insecure ports that the ingress load balancer uses to expose applications.

    #-------------------------------------------------------------------
    # Global settings
    #-------------------------------------------------------------------
    global
        log 127.0.0.1 local2

        chroot /var/lib/haproxy
        pidfile /var/run/haproxy.pid
        maxconn 4000
        user haproxy
        group haproxy
        daemon

        # turn on stats unix socket
        stats socket /var/lib/haproxy/stats

        # utilize system-wide crypto-policies
        #ssl-default-bind-ciphers PROFILE=SYSTEM
        #ssl-default-server-ciphers PROFILE=SYSTEM

    #-------------------------------------------------------------------
    # common defaults that all the 'listen' and 'backend' sections will
    # use if not designated in their block
    #-------------------------------------------------------------------
    defaults
        mode tcp
        log global
        option httplog
        option dontlognull
        option http-server-close
        option forwardfor except 127.0.0.0/8
        option redispatch
        retries 3
        timeout http-request 10s
        timeout queue 1m
        timeout connect 10s
        timeout client 1m
        timeout server 1m
        timeout http-keep-alive 10s
        timeout check 10s
        maxconn 3000

    #-------------------------------------------------------------------
    # main frontend which proxys to the backends
    #-------------------------------------------------------------------

    frontend api
        bind 10.151.19.177:6443
        default_backend controlplaneapi

    frontend machineconfig
        bind 10.151.19.177:22623
        default_backend controlplanemc

    frontend tlsrouter
        bind 10.151.19.177:443
        default_backend secure

    frontend insecurerouter
        bind 10.151.19.177:80
        default_backend insecure

    #-------------------------------------------------------------------
    # static backend
    #-------------------------------------------------------------------

    backend controlplaneapi
        balance source
        server bootstrap 10.151.19.209:6443 check
        server master0 10.151.19.201:6443 check
        server master1 10.151.19.202:6443 check
        server master2 10.151.19.203:6443 check

    backend controlplanemc
        balance source
        server bootstrap 10.151.19.209:22623 check
        server master0 10.151.19.201:22623 check
        server master1 10.151.19.202:22623 check
        server master2 10.151.19.203:22623 check

    backend secure
        balance source
        server compute0 10.151.19.204:443 check
        server compute1 10.151.19.205:443 check
        server compute2 10.151.19.206:443 check
        server compute3 10.151.19.207:443 check
        server compute4 10.151.19.208:443 check

    backend insecure
        balance source
        server compute0 10.151.19.204:80 check
        server compute1 10.151.19.205:80 check
        server compute2 10.151.19.206:80 check
        server compute3 10.151.19.207:80 check
        server compute4 10.151.19.208:80 check
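The four backends above share one shape: a name, a port, and a list of servers. If your cluster has a different number of nodes, a small generator can save some typing. This is only a sketch; `haproxy_backend` and its `label=ip` argument format are my own invention, not something HAProxy provides.

```shell
#!/bin/sh
# Hypothetical helper: print an HAProxy backend block.
# Arguments: backend name, port, then one "label=ip" pair per server.
haproxy_backend() {
    name=$1
    port=$2
    shift 2
    printf 'backend %s\n' "$name"
    printf '    balance source\n'
    for pair in "$@"; do
        # ${pair%%=*} is the label before "=", ${pair#*=} the IP after it.
        printf '    server %s %s:%s check\n' "${pair%%=*}" "${pair#*=}" "$port"
    done
}

haproxy_backend controlplaneapi 6443 \
    bootstrap=10.151.19.209 \
    master0=10.151.19.201 \
    master1=10.151.19.202 \
    master2=10.151.19.203
```

Whichever way the file is produced, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the syntax without starting the service.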

That is all there is to the load balancer configuration. But when trying to start the HAProxy service, you might run into the following issue:

    [root@medium-dns-dhcp ~]# systemctl start haproxy
    Job for haproxy.service failed because the control process exited with error code.
    See "systemctl status haproxy.service" and "journalctl -xe" for details.

    [root@medium-dns-dhcp ~]# systemctl status haproxy.service
    ● haproxy.service - HAProxy Load Balancer
       Loaded: loaded (/usr/lib/systemd/system/haproxy.service; disabled; vendor preset: disabled)
       Active: failed (Result: exit-code) since Fri 2020-07-10 11:01:57 EDT; 21s ago
      Process: 30524 ExecStart=/usr/sbin/haproxy -Ws -f $CONFIG -p $PIDFILE (code=exited, status=1/FAILURE)
      Process: 30522 ExecStartPre=/usr/sbin/haproxy -f $CONFIG -c -q (code=exited, status=0/SUCCESS)
     Main PID: 30524 (code=exited, status=1/FAILURE)

    Jul 10 11:01:57 medium-dns-dhcp haproxy[30524]: [WARNING] 191/110157 (30524) : config : 'option forwardfor' ignored for backend 'controlplanemc' as it requires HTTP mode.
    Jul 10 11:01:57 medium-dns-dhcp haproxy[30524]: [WARNING] 191/110157 (30524) : config : 'option forwardfor' ignored for backend 'secure' as it requires HTTP mode.
    Jul 10 11:01:57 medium-dns-dhcp haproxy[30524]: [WARNING] 191/110157 (30524) : config : 'option forwardfor' ignored for backend 'insecure' as it requires HTTP mode.
    Jul 10 11:01:57 medium-dns-dhcp haproxy[30524]: [ALERT] 191/110157 (30524) : Starting frontend api: cannot bind socket [10.151.19.177:6443]
    Jul 10 11:01:57 medium-dns-dhcp haproxy[30524]: [ALERT] 191/110157 (30524) : Starting frontend machineconfig: cannot bind socket [10.151.19.177:22623]
    Jul 10 11:01:57 medium-dns-dhcp haproxy[30524]: [ALERT] 191/110157 (30524) : Starting frontend tlsrouter: cannot bind socket [10.151.19.177:443]
    Jul 10 11:01:57 medium-dns-dhcp haproxy[30524]: [ALERT] 191/110157 (30524) : Starting frontend insecurerouter: cannot bind socket [10.151.19.177:80]
    Jul 10 11:01:57 medium-dns-dhcp systemd[1]: haproxy.service: Main process exited, code=exited, status=1/FAILURE
    Jul 10 11:01:57 medium-dns-dhcp systemd[1]: haproxy.service: Failed with result 'exit-code'.
    Jul 10 11:01:57 medium-dns-dhcp systemd[1]: Failed to start HAProxy Load Balancer.

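To fix this issue, run the following commands. The first tells SELinux to let HAProxy use any port, and the second allows binding to an IP address that is not assigned to a local interface. The final sysctl -p applies the new kernel setting right away instead of waiting for a reboot.

    setsebool -P haproxy_connect_any=1
    echo "net.ipv4.ip_nonlocal_bind = 1" >> /etc/sysctl.conf
    sysctl -p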

Now you should be able to start and enable HAProxy without any errors.

    systemctl restart haproxy
    systemctl enable haproxy

Wrapping up

Well, this is the end of the tutorial. Now everything should be all set to start your OpenShift installation. I hope you enjoyed the tutorial. If it was in any way helpful to you, please give a clap and maybe take a look at one of our other articles.
